As the world moves towards smarter and more efficient technologies, LiDAR and its applications have gained more of the spotlight. But many myths have also emerged about LiDAR technology and its efficacy, relevance, and performance. This blog post discusses six common misconceptions and myths about LiDAR and sheds some light on the facts.

1. Myth: LiDAR uses complicated technology

While LiDARs are indeed complex sensors consisting of different hardware components, the underlying working principle is actually quite simple! The sensor uses the time-of-flight method, much like bats do with sound waves or Radars with microwaves.

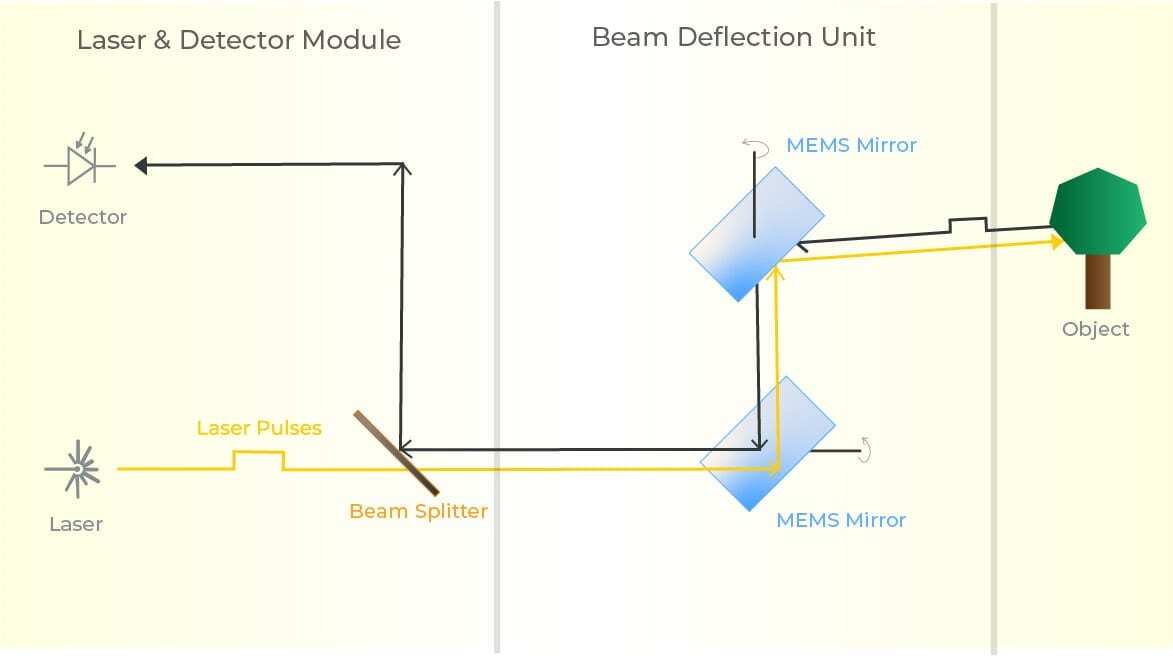

If we break down the sensor into its components, namely the laser, the detector, and a beam deflection unit (mirrors in the case of Blickfeld), LiDAR ceases to appear such a daunting technology. The laser source emits laser pulses, which the mirrors deflect across the scene, and the detector then captures the light reflected back. The time between the emission and the return of each pulse is then used to calculate the exact distance to the object.
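The distance calculation itself is simple arithmetic. Because the pulse travels to the object and back, the distance is half the round-trip time multiplied by the speed of light, d = c·t/2. Below is a minimal Python sketch of that step; the function name is illustrative and not part of any Blickfeld API, and it ignores the small effect of the refractive index of air.

```python
# Speed of light in vacuum, in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Return the one-way distance to the target for a measured round-trip time.

    The pulse travels to the target and back, so the distance is half of
    (speed of light x elapsed time). The refractive index of air
    (about 1.0003) is ignored in this sketch.
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A return detected 200 nanoseconds after the pulse was emitted.
    print(f"{tof_distance_m(200e-9):.2f} m")
```

A 200-nanosecond round trip corresponds to roughly 30 meters. The same formula shows why the timing electronics must be precise: one nanosecond of timing error translates into about 15 centimeters of range error.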

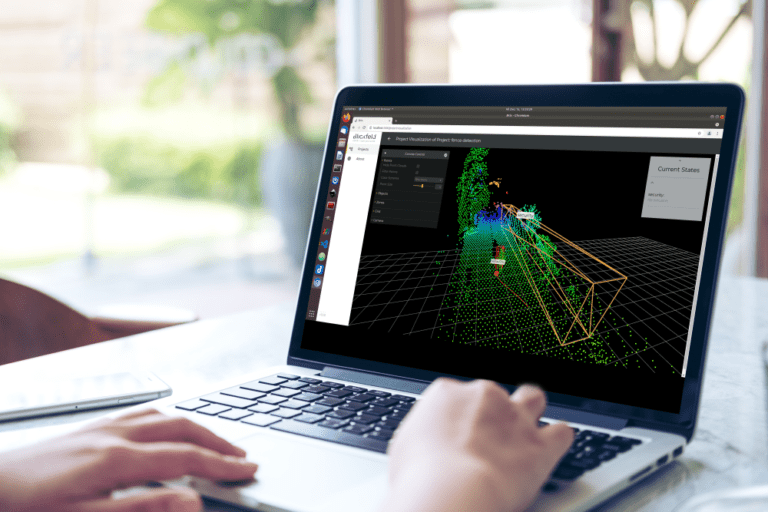

Repeat this process thousands or even millions of times per second, and a precise, real-time 3D map of the environment is generated. This 3D map contains data that is easy to analyze and use, for example, for detecting intrusions in security applications.
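Each measurement yields a distance plus the direction in which the beam deflection unit pointed the pulse. Converting these spherical coordinates to x, y, z gives one point of the 3D map. A minimal sketch, assuming the scanner reports azimuth and elevation angles for every pulse and using an x-forward, y-left, z-up convention (both are assumptions; real sensors define their own coordinate frames and data formats):

```python
import math
from typing import Iterable, List, Tuple

Point = Tuple[float, float, float]


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float) -> Point:
    """Convert one measurement (distance plus beam direction) to x, y, z.

    Assumed convention: x points forward, y to the left, z up; azimuth is
    measured in the horizontal plane from the x-axis, elevation from that plane.
    """
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def build_point_cloud(measurements: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Turn (range, azimuth, elevation) tuples from one scan into a point cloud."""
    return [to_cartesian(r, az, el) for r, az, el in measurements]


if __name__ == "__main__":
    scan = [
        (10.0, 0.0, 0.0),               # straight ahead
        (10.0, math.radians(90), 0.0),  # directly to the left
        (10.0, 0.0, math.radians(30)),  # ahead and tilted upwards
    ]
    for x, y, z in build_point_cloud(scan):
        print(f"({x:.2f}, {y:.2f}, {z:.2f})")
```

Running this conversion for every pulse of a scan, many times per second, is what turns individual distance readings into the real-time 3D map.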

The technology was developed in the early 1960s, following the invention of the pulsed laser, which emits repetitive light pulses instead of a continuous wave and made it possible to apply the same principle with light.

You can learn more about LiDAR technology and how it operates in this article.

2. Myth: LiDARs are redundant for autonomous vehicle applications

LiDARs are integral to autonomous vehicle applications due to their ability to capture accurate 3D data irrespective of the lighting and weather conditions.

Elon Musk famously dismissed LiDAR’s usefulness for autonomous vehicles at a conference in 2019, which has fueled many myths about LiDAR and its relevance to this day. He claimed that cameras combined with complementary intelligent algorithms make LiDARs redundant, and he has maintained that position since.

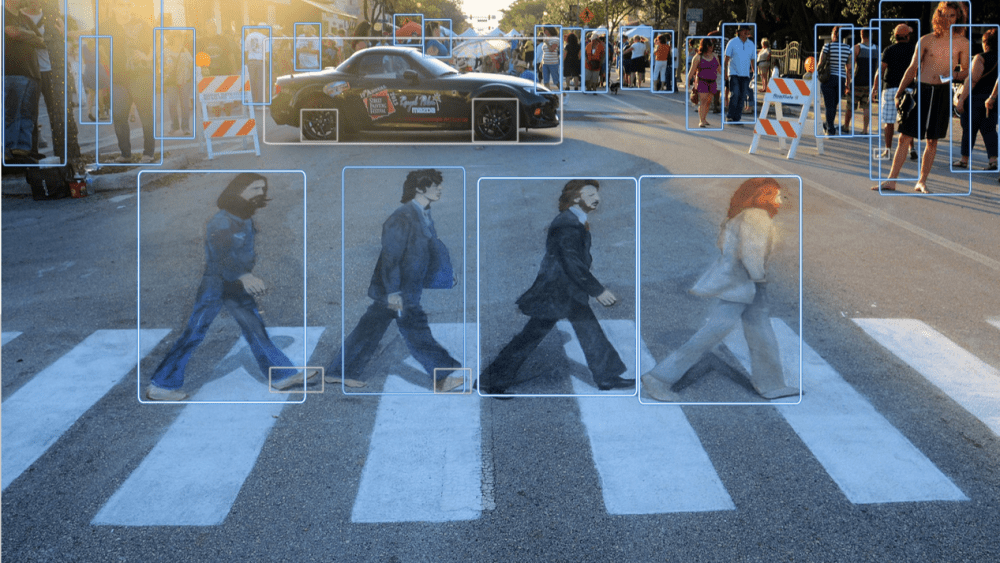

While cameras are required for color vision and rely on image recognition algorithms, as mentioned above, their glaring drawback is that they capture only 2D data. This makes them susceptible to being fooled by visual illusions and to misjudging distances. As a few tragic incidents have shown, these failures can be hazardous and even fatal.

In contrast, LiDAR can reliably capture data in 3D and identify the exact distance and dimensions, thus leaving no room for interpretation.

Integrating accurate 3D LiDAR data helps in situations where cameras can go ‘blind’, such as when they need time to adjust to the changing light after exiting a tunnel, or when objects are hidden by obstacles.

In addition, the 2D annotations generated from camera images might appear accurate enough to train an autonomous vehicle’s algorithms. But they still contain many imprecisions that reduce the accuracy of the machine learning (ML) models and, consequently, the vehicle’s ability to perceive, predict, and plan. The ML capabilities behind autonomous driving also need to be scalable and address the ‘long tail’. In other words, it is not enough to handle the 95% of scenarios that vehicles commonly face on the road; the models must also be trainable for the tricky remaining 5% of cases while continually improving their performance. For a camera-only system, this requires enormous amounts of training data.

In comparison, LiDAR data provides higher-accuracy training data and can yield more predictive machine learning models. LiDARs are therefore necessary for more dependable and robust autonomous driving systems.

3. Myth: LiDAR can be replaced entirely by other sensors

Sensors are not interchangeable and instead need to complement each other in many complex applications.

One of the most common myths about LiDAR is that it can be replaced by camera or Radar sensors. This stems from a lack of understanding of how these sensor technologies detect and classify objects in different ways. To appreciate their complementary functions, it is essential to understand each sensor’s capabilities and the type of data it produces.

- Cameras capture a visual representation of the world as a 2D image, providing greyscale or color information as well as texture and contrast data. Image recognition software is needed to analyze this data for further applications. Since cameras use a passive measuring principle, objects need to be illuminated to be detected. Also, creating 3D images requires two or more cameras along with substantial computing power.

- Radars measure 3D information and excel at determining an object’s distance and speed with great precision. However, because of their low resolution, they cannot precisely detect objects (on a centimeter scale) or classify them.

- LiDARs measure points in 3D and create point clouds from the sensor data. Based on the size of the resulting point clusters, objects can be precisely detected and even divided into categories such as people, cars, and buildings.
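The idea of detecting objects from point clusters and sorting them by size can be sketched in a few lines of Python. This is an illustration, not Blickfeld’s or any vendor’s perception pipeline: it groups points with simple Euclidean clustering and labels each cluster from its bounding box, and the size thresholds in `classify_by_size` are hypothetical values chosen for the example.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point], max_gap_m: float) -> List[List[Point]]:
    """Group points that are linked by chains of neighbors at most max_gap_m apart.

    Brute-force O(n^2) neighbor search, fine for a small illustrative scan;
    real pipelines use a spatial index such as a k-d tree.
    """
    unvisited = set(range(len(points)))
    clusters: List[List[Point]] = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            neighbors = [j for j in unvisited if math.dist(points[i], points[j]) <= max_gap_m]
            for j in neighbors:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        clusters.append([points[k] for k in members])
    return clusters


def classify_by_size(cluster: Sequence[Point]) -> str:
    """Label a cluster from its bounding-box extent (hypothetical thresholds)."""
    xs, ys, zs = zip(*cluster)
    length = max(max(xs) - min(xs), max(ys) - min(ys))  # larger horizontal extent
    height = max(zs) - min(zs)
    if length < 1.0 and 1.0 <= height <= 2.2:
        return "person"
    if 2.5 <= length <= 6.0 and height < 2.5:
        return "car"
    if height > 3.0:
        return "building"
    return "unknown"


if __name__ == "__main__":
    # Hypothetical scan: a person-sized column of points and a car-sized row.
    person = [(5.0, 0.0, 0.0), (5.0, 0.0, 0.5), (5.0, 0.0, 1.0), (5.0, 0.3, 1.7)]
    car = [(20.0 + 0.5 * i, 0.0, 0.5) for i in range(9)] + [(22.0, 0.0, 1.2)]
    for cluster in euclidean_clusters(person + car, max_gap_m=0.8):
        print(len(cluster), classify_by_size(cluster))
```

Because the points already carry real-world coordinates, object dimensions fall out of the data directly; no depth has to be inferred from a flat image.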

LiDAR gathers highly detailed and reliable 3D information and thereby fills the gaps left by other sensor technologies. LiDAR data stands out because it allows the most important objects in various environments to be detected and accurately categorized. Data from cameras can then be used for more in-depth analysis and illustrative representation. Similarly, the distance and speed data collected by a Radar can be verified with a LiDAR for greater accuracy. This means that sensor-based applications of the future will integrate a mix of cameras, Radar systems, and LiDARs, along with other sensors.
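One common way to combine a Radar distance with a LiDAR distance is to check that the two agree and then take an inverse-variance weighted average, so that the more precise sensor dominates the result. The sketch below illustrates that generic sensor-fusion technique; it is not a method described in this post, and the noise figures are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    value_m: float  # measured distance to the object
    sigma_m: float  # assumed 1-sigma measurement noise of that sensor


def consistent(a: RangeEstimate, b: RangeEstimate, k: float = 3.0) -> bool:
    """Check whether two estimates agree within k combined standard deviations."""
    return abs(a.value_m - b.value_m) <= k * math.hypot(a.sigma_m, b.sigma_m)


def fuse(a: RangeEstimate, b: RangeEstimate) -> RangeEstimate:
    """Inverse-variance weighted average of two independent estimates.

    The more precise sensor gets the larger weight, and the fused sigma is
    smaller than either input sigma.
    """
    wa, wb = 1.0 / a.sigma_m**2, 1.0 / b.sigma_m**2
    value = (wa * a.value_m + wb * b.value_m) / (wa + wb)
    return RangeEstimate(value, math.sqrt(1.0 / (wa + wb)))


if __name__ == "__main__":
    radar = RangeEstimate(value_m=42.30, sigma_m=0.20)  # hypothetical noise figures
    lidar = RangeEstimate(value_m=42.05, sigma_m=0.02)
    if consistent(radar, lidar):
        fused = fuse(radar, lidar)
        print(f"fused range: {fused.value_m:.3f} m +/- {fused.sigma_m:.3f} m")
    else:
        print("sensors disagree; flag the detection for further checks")
```

With these example values the fused distance stays very close to the LiDAR reading, while a large disagreement between the two sensors is flagged instead of silently averaged.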

4. Myth: LiDAR cannot work in poor external conditions

In poor weather or lighting conditions, LiDARs can offer a considerable performance advantage over other sensors such as cameras.

Cameras generally cannot operate without sufficient ambient lighting. In perimeter surveillance or outdoor facility monitoring, for instance, a camera can only see as far as the area is artificially illuminated. In contrast, a LiDAR’s range can reach several hundred meters regardless of lighting conditions, because it emits its own infrared laser light instead of relying on ambient visible light. This means that a security system equipped with a LiDAR sensor can detect movement or intrusions just as effectively in pitch darkness as in daylight, even when no floodlights or street lighting are present.

In poor conditions such as fog, rain, or snow, LiDAR again offers a noticeable performance advantage and can compensate for the weaknesses of other sensors, such as cameras, in a perception system.

In the rain, LiDARs generally perform better than cameras because of their wide optical beam. The light can pass around obstructions such as raindrops on the sensor mirror, so the LiDAR’s range remains largely unaffected, up to a point. A camera’s pixels, by contrast, are much smaller than raindrops, so the drops obscure parts of its field of view.

The large optical beam also enables LiDAR to detect multiple returns from different ranges and process only the one with the strongest signal. This is useful in bad weather such as snow: the reflections off the snowflakes are ignored, and only the return from a hard object in the background is processed. Without machine learning algorithms, cameras cannot distinguish between a snowflake, a wet lens, and a hard object, and they combine all of these into a distorted final image.
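The strongest-return strategy described above amounts to a simple selection over the echoes of a single pulse. A minimal sketch, assuming each echo is reported as a range and a relative intensity (the `Echo` type and the sample values are hypothetical; actual sensors expose their return modes and data in their own formats):

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Echo:
    range_m: float    # distance at which this echo was detected
    intensity: float  # relative echo strength (hypothetical, unitless)


def strongest_echo(echoes: List[Echo]) -> Optional[Echo]:
    """Keep only the strongest echo of a single laser pulse.

    Weak echoes, e.g. from snowflakes that reflect only a small part of the
    wide beam, are discarded in favor of the strong echo from a solid object
    further away. Returns None if the pulse produced no echo at all.
    """
    return max(echoes, key=lambda e: e.intensity, default=None)


if __name__ == "__main__":
    # One pulse in snowfall: two weak snowflake echoes, then a wall at 25.4 m.
    pulse = [Echo(3.2, 0.08), Echo(7.9, 0.11), Echo(25.4, 0.63)]
    best = strongest_echo(pulse)
    print(f"processed return: {best.range_m} m")
```

This works only because the wide beam is partly blocked by each snowflake rather than fully covered, so the solid object behind still returns most of the light.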

LiDARs also have much shorter exposure times, i.e., faster shutter speeds (about a millionth of a second), than cameras (about a thousandth of a second). As a result, a raindrop is captured in its original shape rather than as a streak across multiple pixels.
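A quick calculation shows why exposure time matters. Assuming a large raindrop falls at roughly 9 m/s (close to its terminal velocity; the exact value depends on drop size), the distance it travels during each exposure is:

```python
# Assumed fall speed of a large raindrop (roughly its terminal velocity).
RAINDROP_SPEED_M_PER_S = 9.0


def smear_length_m(exposure_s: float, speed_m_per_s: float = RAINDROP_SPEED_M_PER_S) -> float:
    """Distance an object moves while the sensor is collecting light."""
    return speed_m_per_s * exposure_s


if __name__ == "__main__":
    for name, exposure in [("camera", 1e-3), ("LiDAR", 1e-6)]:
        print(f"{name}: {smear_length_m(exposure) * 1e3:.3f} mm")
```

During a 1 ms camera exposure the drop moves about 9 mm, several times the diameter of a typical drop, which produces the streak. During a 1 µs LiDAR measurement it moves only about 9 µm, so it is effectively frozen in place.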

Because LiDAR is an optical device after all, its performance does degrade in conditions such as heavy fog. Even so, it still provides far more useful data and operates at longer ranges than counterparts such as cameras, as seen in the picture above.

5. Myth: LiDAR sensors are expensive

The introduction of MEMS-based LiDAR sensors has made LiDARs easy to produce and very cost-effective.

There was a time when the only LiDARs available on the market were spinning LiDARs, which were large, very expensive, and therefore not viable for high-volume manufacturing. So it is natural that myths about LiDAR’s high price persist. But the introduction of MEMS (microelectromechanical systems) based LiDARs has truly turned the game on its head. MEMS components are made from silicon, which makes their production easy to scale and thus very cost-efficient.

Combined with other standard components and a design that needs no regular maintenance, solid-state LiDARs are becoming much more affordable. In recent years, the cost of these LiDAR sensors has fallen from thousands of dollars to a few hundred, and this trend is expected to continue. In fact, when produced in large volumes, medium-range sensors can even be sold at three-digit prices.

6. Myth: MEMS-based LiDARs are not high-performance

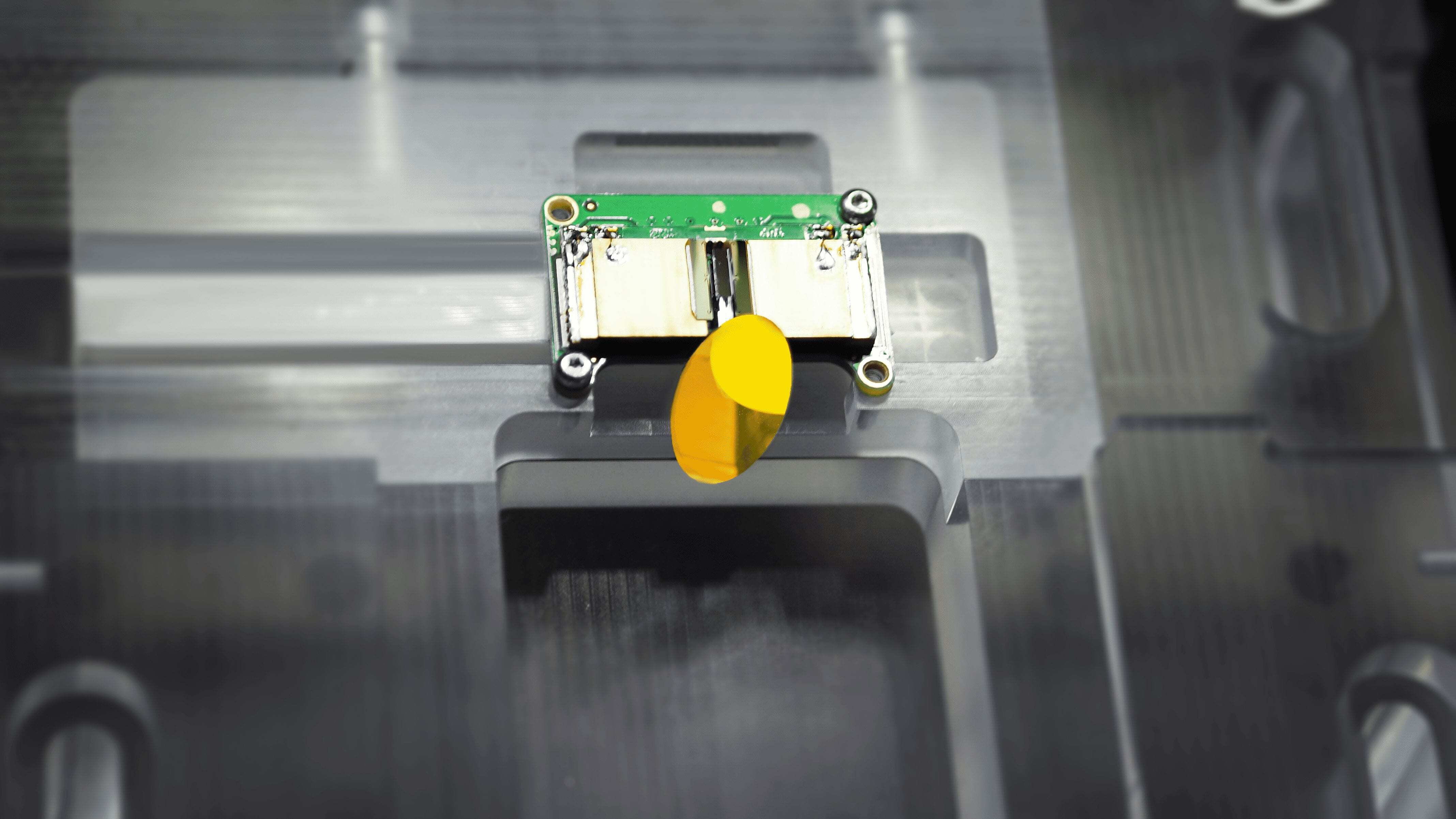

Blickfeld LiDAR’s proprietary MEMS mirrors and coaxial design direct a high proportion of photons onto the photodetector, giving the sensors very high performance.

While advantages such as high scalability and low cost are widely associated with MEMS-based sensors, a widespread misconception holds that these devices have a poor detection range. This stems from the fact that MEMS mirrors are usually very small (hence the term “micro” in MEMS). Generally speaking, the larger the mirror, the larger the light-collecting area and the longer the range. Blickfeld has developed its own MEMS mirror with impressive dimensions of more than 10 millimeters, enabling a high proportion of photons to be directed onto the photodetector.
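Why mirror size matters can be illustrated with a simplified LiDAR range equation. For a large, diffusely reflecting target, the received power is roughly P_r = P_t·ρ·A_r·η / (π·R²), where P_t is the transmitted power, ρ the target reflectivity, A_r the receiving aperture area, and η the optical efficiency. Solving for the range R at which P_r falls to the detection threshold gives R_max ∝ √A_r, i.e., proportional to the aperture diameter. The sketch below assumes the MEMS mirror acts as the receiving aperture, as in a coaxial design, and ignores atmospheric losses, background light, and eye-safety limits, so treat it as a scaling illustration only; all parameter values are hypothetical.

```python
import math


def max_range_m(tx_power_w: float, reflectivity: float, aperture_diameter_m: float,
                efficiency: float, min_detectable_power_w: float) -> float:
    """Idealized maximum range for an extended, diffusely reflecting target.

    Based on the simplified LiDAR equation P_r = P_t * rho * A_r * eta / (pi * R^2),
    solved for the range R at which P_r drops to the detection threshold.
    Ignores atmospheric loss, background light, and eye-safety limits.
    """
    aperture_area_m2 = math.pi * aperture_diameter_m**2 / 4.0
    return math.sqrt(tx_power_w * reflectivity * aperture_area_m2 * efficiency
                     / (math.pi * min_detectable_power_w))


if __name__ == "__main__":
    # Hypothetical parameters; only the ratio between the two results matters here.
    params = dict(tx_power_w=10.0, reflectivity=0.1, efficiency=0.5,
                  min_detectable_power_w=1e-9)
    small = max_range_m(aperture_diameter_m=0.001, **params)
    large = max_range_m(aperture_diameter_m=0.010, **params)
    print(f"10 mm vs 1 mm aperture: {large / small:.1f}x the range")
```

Under these idealized assumptions, a tenfold larger aperture yields a tenfold longer range; real sensors will deviate from this because the ignored factors also matter.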

The coaxial design of the Blickfeld sensors also allows very effective spatial filtering, meaning that photons are collected only from the direction in which they were sent. This minimizes background light and enables a very high signal-to-noise ratio, increasing the range even further.
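The benefit of spatial filtering can be illustrated with the standard shot-noise model for photon detection: if S signal photons and B background photons reach the detector, the noise scales with √(S + B), so the signal-to-noise ratio is S/√(S + B). A minimal sketch with hypothetical photon counts, assuming the spatial filter removes 99% of the background light:

```python
import math


def shot_noise_snr(signal_photons: float, background_photons: float) -> float:
    """SNR of a photon-counting measurement limited by Poisson shot noise.

    Both signal and background photons contribute noise, so the standard
    deviation of the count is sqrt(S + B) and the SNR is S / sqrt(S + B).
    Detector dark counts and electronic noise are ignored in this sketch.
    """
    return signal_photons / math.sqrt(signal_photons + background_photons)


if __name__ == "__main__":
    signal = 100.0      # hypothetical echo photons per measurement
    background = 900.0  # hypothetical sunlight photons without spatial filtering
    for label, bg in [("unfiltered", background), ("spatially filtered", background * 0.01)]:
        print(f"{label}: SNR = {shot_noise_snr(signal, bg):.2f}")
```

Cutting the background by 99% raises the SNR from about 3.2 to about 9.6 in this example, which is what allows weaker echoes from more distant objects to be detected reliably.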

Learn more about what makes MEMS-based LiDAR sensors so powerful, robust, and scalable in this blog post.

These were some of the common myths about LiDAR technology and its applications. Watch this space for the second part of the series, where we unravel and tackle some more misconceptions.