The developments in LiDAR technology have opened up a whole new world of use cases. The ability to collect anonymized distance information as point clouds with centimeter-level accuracy is an exciting prospect. LiDARs usually capture their environment and output point clouds of the entire scene before any data processing takes place. While extremely useful for many applications, the unprocessed point clouds also pose a technological challenge, as transferring, storing, and analyzing the large amount of data generated demands considerable resources.

On-device Motion Detection

Blickfeld tackles this problem by introducing an on-device preprocessing algorithm that enables motion detection applications for the Blickfeld smart LiDAR. This is achieved through the subtraction of the static background on the device itself using the high-performance system-on-chip (SoC) integrated within the LiDAR. The new feature allows the extraction of insightful information, specifically dynamic motion detection, early in the processing pipeline and generation of pre-processed point cloud data ready to be analyzed and used in the respective applications.

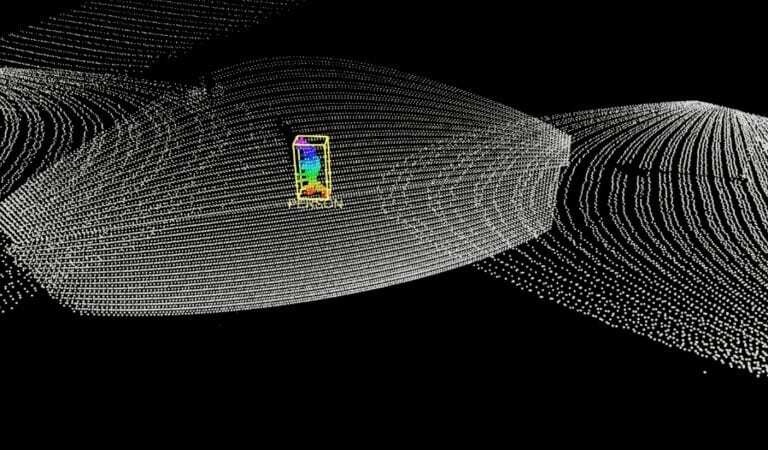

Although the effect depends on the amount of activity in the scene, the algorithm generally leads to a dramatic reduction in the amount of data to be transmitted, especially when the scene is mostly static. In the example, more than 98% less data has to be transmitted: the total number of data points drops from 8800+ to merely ~163, and the memory footprint and transmission time shrink in proportion.
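The saving quoted for this example can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name `reduction_percent` is made up for this article, and 8800 is used as the lower bound of the "8800+" points in the full frame.

```python
def reduction_percent(points_before: int, points_after: int) -> float:
    """Percentage of points that no longer need to be transmitted."""
    if points_before <= 0:
        raise ValueError("points_before must be positive")
    return 100.0 * (points_before - points_after) / points_before


# Point counts taken from the example scene (8800 is a lower bound).
saving = reduction_percent(8800, 163)
print(f"{saving:.1f}% fewer points to transmit")  # 98.1% fewer points to transmit
```

Because each point occupies a fixed number of bytes, the same percentage applies to the memory footprint and, on a fixed-bandwidth link, to the transmission time.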

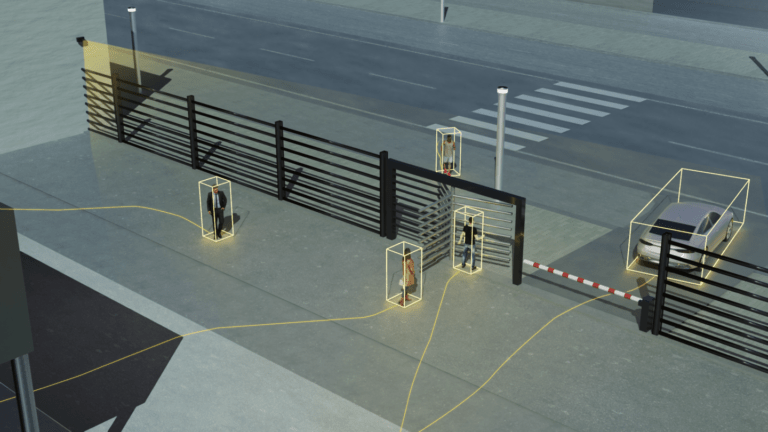

This enhances LiDAR sensors’ ability to operate in applications involving recording, tracking, and analysis of moving objects in a wide variety of environments. Applications that benefit immensely from the feature include intelligent visual surveillance and tracking systems for traffic and public places such as roads, train stations, and airports, as well as many security use cases.

Background Subtraction Working Principle

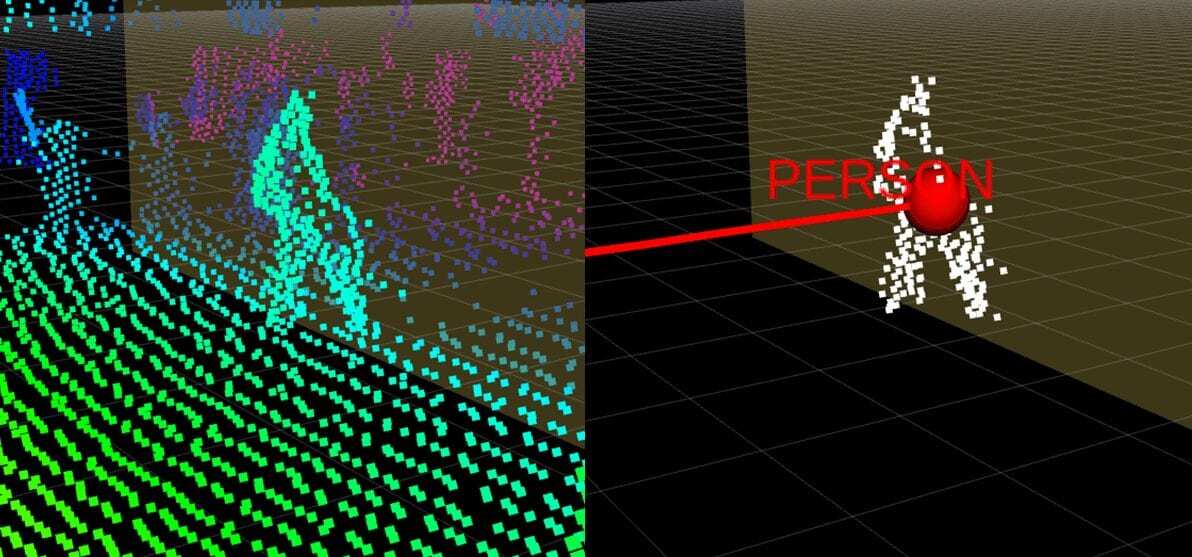

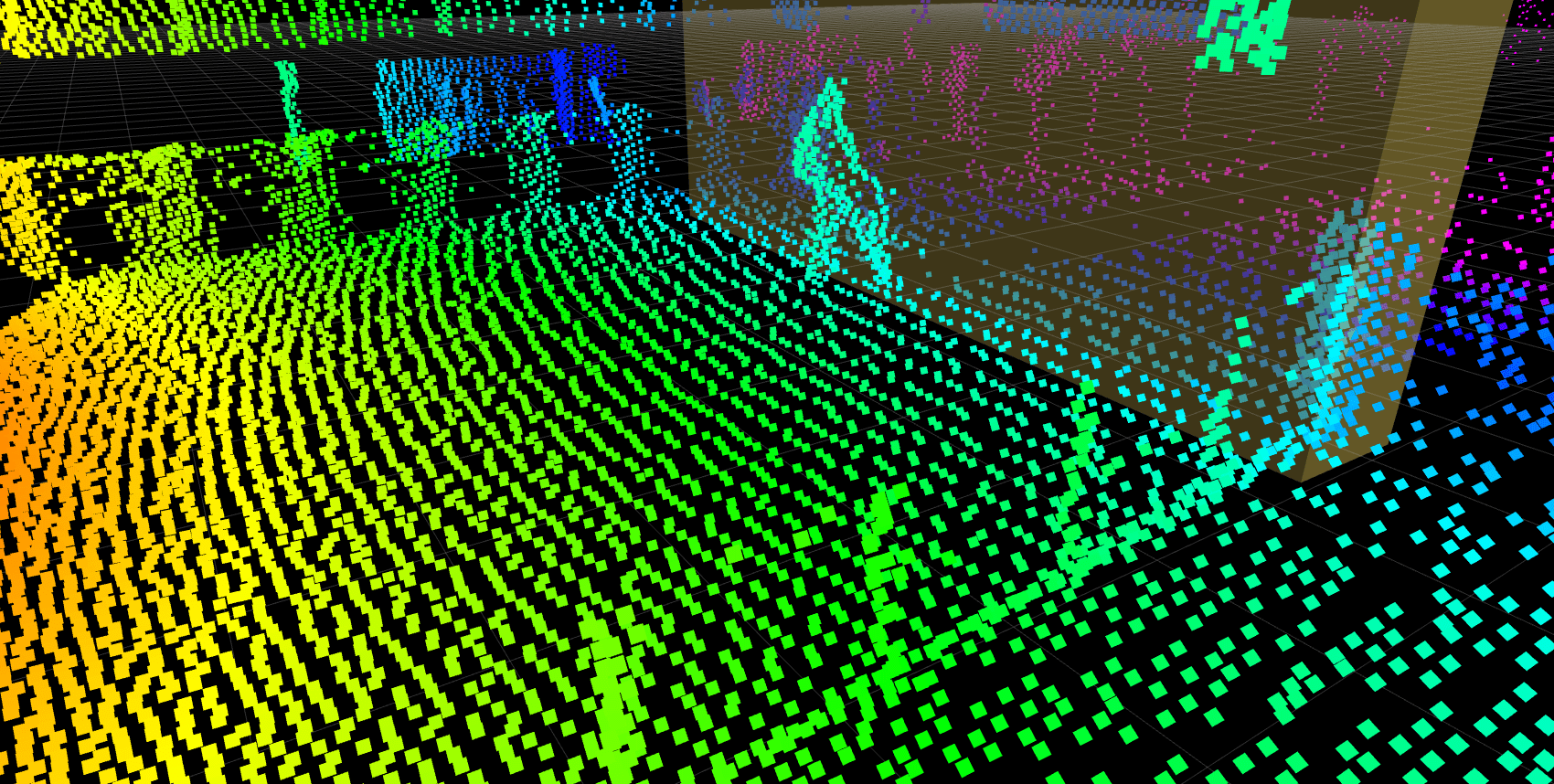

The background subtraction algorithm that enables on-device motion detection uses a configurable number of reference frames to estimate the static points (background) in the scene. Once the reference frames are processed, it compares the background model to the active frame, removes all static points from the point cloud, and obtains the non-static points (foreground).

Points in the active frame that deviate significantly from the background are considered to be moving objects. These “foreground” points are then further processed for both object localization and tracking as well as on-device motion detection for various use cases.
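The principle can be illustrated with a minimal sketch. It is not Blickfeld's on-device implementation, whose details are not described here. It assumes each frame is a list of measured ranges, one per fixed scan direction, models the background as the per-direction median over the reference frames, and flags a point as foreground when it deviates by more than a hypothetical threshold `threshold_m`.

```python
from statistics import median


def build_background(reference_frames):
    """Background model: median range per scan direction over all reference frames."""
    return [median(ranges) for ranges in zip(*reference_frames)]


def extract_foreground(frame, background, threshold_m=0.2):
    """Return (direction_index, range) for points that deviate from the background."""
    return [
        (i, r)
        for i, (r, b) in enumerate(zip(frame, background))
        if abs(r - b) > threshold_m
    ]


# Three reference frames of a static scene (ranges in meters, 4 scan directions).
reference_frames = [
    [10.0, 10.1, 9.9, 12.0],
    [10.0, 10.0, 10.0, 12.1],
    [10.1, 10.0, 10.0, 11.9],
]
background = build_background(reference_frames)

# In the active frame, something appears 6.5 m away in direction 1.
active_frame = [10.0, 6.5, 10.0, 12.0]
foreground = extract_foreground(active_frame, background)
print(foreground)  # [(1, 6.5)]
```

The median is chosen here because it tolerates an occasional moving object in the reference frames; the number of reference frames and the threshold correspond to the kind of parameters one would expect to be configurable.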

The on-device motion detection feature using the background subtraction algorithm can be of great benefit for a host of applications, such as the following:

On-Device Motion Detection for People Counting

People counting is useful in various environments, such as airports, retail stores, universities, banks, recreation facilities – basically any place where intelligent customer and operational insights would be required.

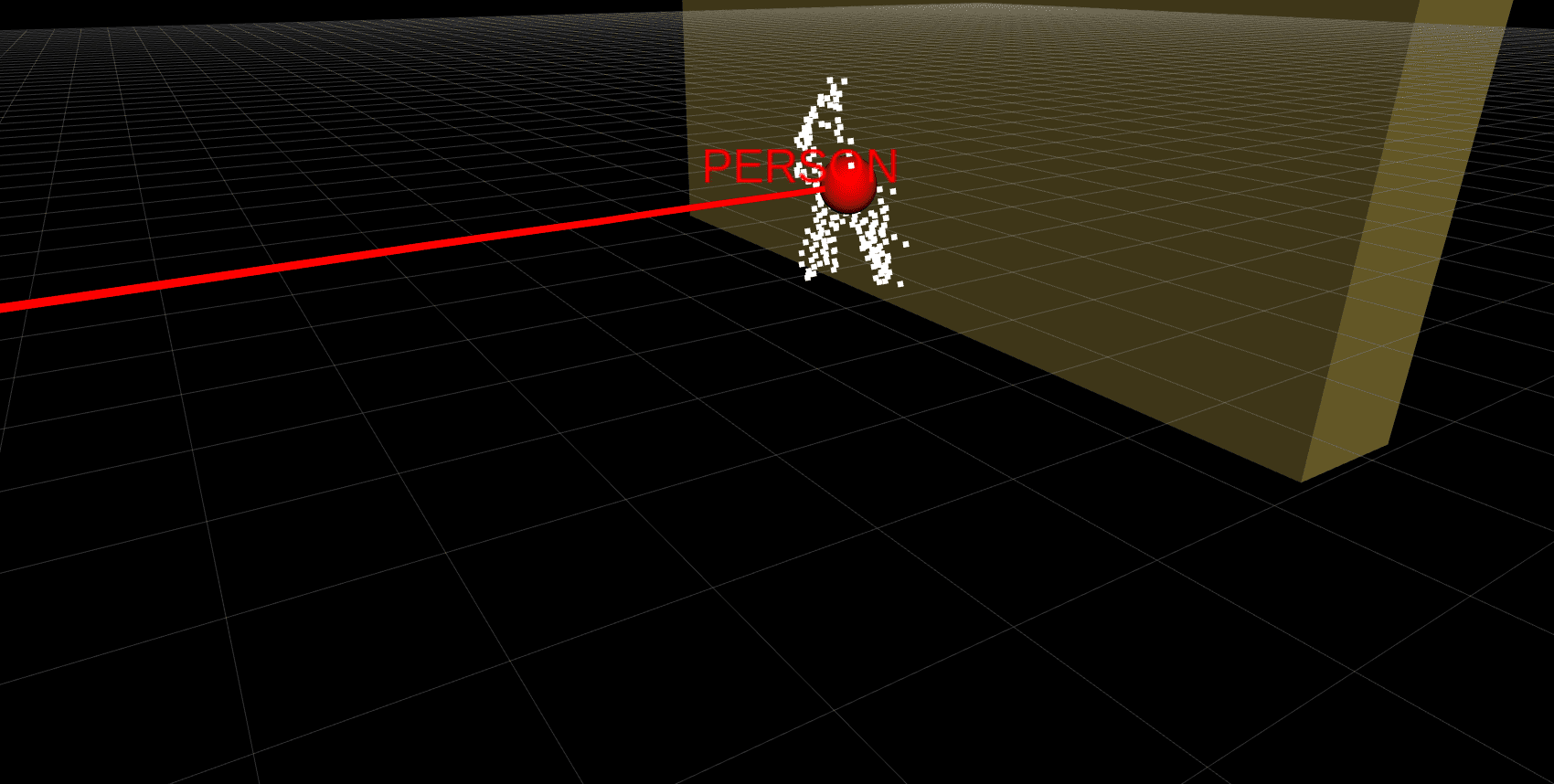

Calculating the number of people passing through a particular zone could be vital for improving operations and optimizing the utilization of resources. For example, in the use case below, LiDAR sensors can be installed at the entry point of a supermarket to create a 3D vision of the surroundings and capture data points of the entire field of view.

A zone could be created to count the total number of people visiting the store. This automated tracking would, for instance, help in precisely calculating the staff requirements during different operational periods, and help in cutting down operational costs. However, the data feed generated would also contain many static objects such as doors, counters, grocery shelves, etc. Using the background subtraction algorithm, only data containing the person and the zone would be transferred over the connection, thus dramatically cutting down the load on the network resources.
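Counting visitors in a zone can be sketched as follows. This is a simplified illustration, not the actual product logic: it assumes an upstream tracker already delivers observations as `(track_id, x, y)` tuples in meters, and the `Zone` class and `count_visitors` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangular counting zone on the ground plane (meters)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def count_visitors(observations, zone: Zone) -> int:
    """Count distinct tracked objects seen inside the zone at least once."""
    return len({track_id for track_id, x, y in observations if zone.contains(x, y)})


entrance = Zone(x_min=0.0, x_max=2.0, y_min=0.0, y_max=1.0)
observations = [
    (1, 0.5, 0.5),  # person 1 inside the zone
    (1, 0.6, 0.5),  # person 1 again, must not be counted twice
    (2, 5.0, 5.0),  # person 2 never enters the zone
    (3, 1.5, 0.2),  # person 3 inside the zone
]
print(count_visitors(observations, entrance))  # 2
```

Counting distinct track IDs rather than raw detections keeps a person who lingers in the zone over several frames from being counted more than once.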

To be precise, in this case the data rate before background subtraction was ~3 MB/s per sensor, which dropped to ~0.2 MB/s per sensor afterwards. Further pre-processing clusters the foreground points into objects that are identified as people, which generates actionable insights and shrinks the data stream to ~0.01 MB/s per sensor, a reduction of more than 99% in the total data to be transferred.
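How clustering condenses foreground points into a handful of objects can be shown with a simple Euclidean clustering sketch. The clustering method actually used on the device is not specified here, so the approach, the function names, and the parameters `max_gap_m` and `min_points` are all assumptions made for illustration.

```python
from collections import deque
from math import dist


def euclidean_clusters(points, max_gap_m=0.6, min_points=3):
    """Group points whose neighbors lie within max_gap_m; drop tiny clusters as noise."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            current = queue.popleft()
            near = [i for i in unvisited if dist(points[current], points[i]) <= max_gap_m]
            for i in near:
                unvisited.remove(i)
                queue.append(i)
                members.append(i)
        if len(members) >= min_points:
            clusters.append(sorted(members))
    return sorted(clusters)


def centroid(points, indices):
    """Mean position of the selected points."""
    n = len(indices)
    return tuple(sum(points[i][axis] for i in indices) / n for axis in range(3))


# Foreground points (x, y, z in meters): two people and one stray return.
points = [
    (0.0, 0.0, 0.0), (0.1, 0.0, 0.5), (0.0, 0.1, 1.0), (0.1, 0.1, 1.5),
    (4.0, 2.0, 0.0), (4.0, 2.1, 0.5), (4.1, 2.0, 1.0), (4.0, 2.0, 1.5),
    (9.0, 9.0, 0.2),
]
clusters = euclidean_clusters(points)
people = [centroid(points, c) for c in clusters]
print(len(clusters))  # 2
```

Transmitting one centroid per detected object instead of every foreground point is what makes a further reduction of the data stream possible.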

On-Device Motion Detection for Traffic Surveillance

LiDAR-based traffic monitoring systems are very promising since they provide real-time and anonymized 3D data. This means that while the system can, for example, differentiate between a pedestrian and a cyclist in mixed traffic situations, it cannot identify individuals, which protects the privacy of road users. More nuanced applications are possible as well: the movement data of different road users and metrics such as average speed per lane, vehicle count per lane, vehicle dwell time, people count, and average pedestrian speed can all be calculated using LiDARs in traffic surveillance systems. But traffic surveillance also generates vast amounts of data at every instant, meaning there is a pressing need for smart solutions to reduce the computational effort.
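Two of these metrics, vehicle count per lane and average speed per lane, can be derived from tracked objects as in the sketch below. It assumes a tracker that outputs, for each vehicle, a lane label and timestamped positions `(t_s, x_m, y_m)`; this data layout and the function names are hypothetical.

```python
from collections import defaultdict
from math import dist


def track_speed(samples):
    """Mean speed in m/s along a track given as [(t_s, x_m, y_m), ...]."""
    path = sum(dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))
    duration = samples[-1][0] - samples[0][0]
    return path / duration if duration > 0 else 0.0


def lane_metrics(tracks):
    """Map each lane to (vehicle_count, average_speed_m_per_s)."""
    speeds = defaultdict(list)
    for lane, samples in tracks:
        speeds[lane].append(track_speed(samples))
    return {lane: (len(v), sum(v) / len(v)) for lane, v in speeds.items()}


tracks = [
    ("A", [(0.0, 0.0, 0.0), (2.0, 20.0, 0.0)]),  # 20 m in 2 s
    ("A", [(0.0, 0.0, 3.5), (2.0, 30.0, 3.5)]),  # 30 m in 2 s
    ("B", [(0.0, 0.0, 7.0), (4.0, 20.0, 7.0)]),  # 20 m in 4 s
]
print(lane_metrics(tracks))  # {'A': (2, 12.5), 'B': (1, 5.0)}
```

Since only positions and timestamps of anonymous tracks are involved, metrics of this kind can be computed without any information that identifies an individual.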

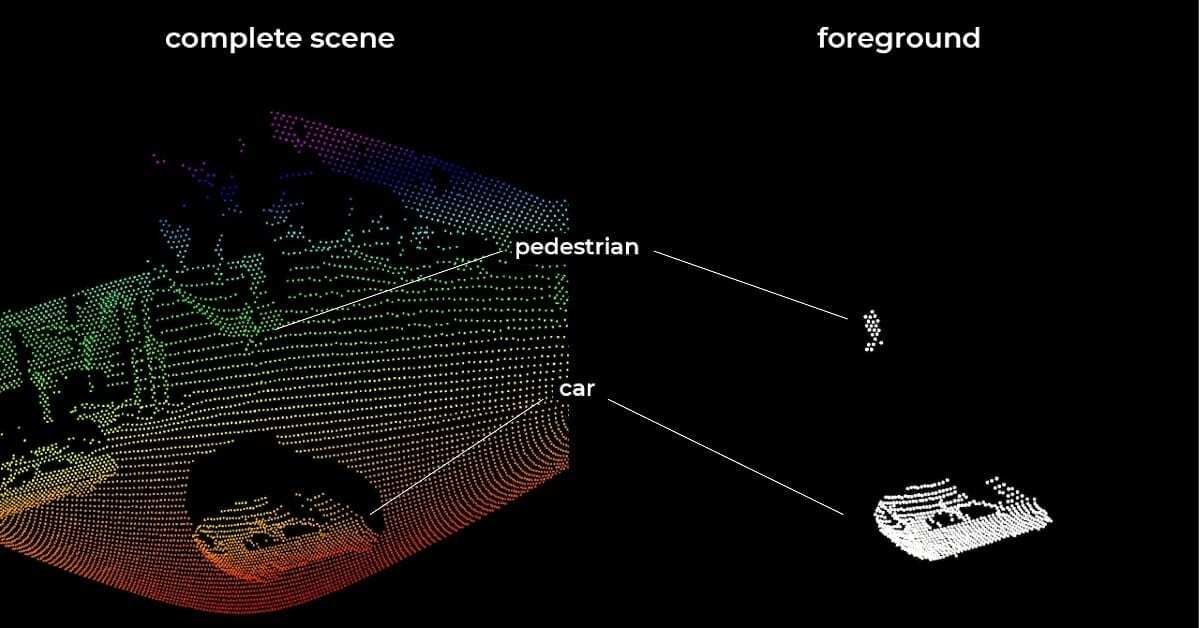

Let’s analyze a typical traffic junction monitored using our smart LiDARs. A point cloud would consist of not only the vehicles and pedestrians approaching an intersection, but also static objects in and around the scene, such as the pavements, roads, and buildings, as seen in the “complete scene”.

But the smart LiDAR’s on-device data analysis capabilities identify and remove the static background objects, leaving only the dynamic foreground objects such as the pedestrian and the moving car, labeled as the “foreground”.

This step can significantly reduce the amount of data to be processed and thus improve the accuracy and speed of object identification while reducing the load on the network when transferring the data.

LiDAR Technology Moving Forward

LiDARs are emerging as one of the most promising sensor-based technologies and are defining the future of smart applications by offering spatial awareness capabilities with pinpoint accuracy. Blickfeld’s Smart LiDARs have taken that to the next level through quick and simplified extraction of actionable insights from point clouds using the pre-processing algorithms.

Starting with the dynamic motion detection algorithm, the on-device background subtraction has already proved its worth in many projects. And with the pre-processing algorithm library expanding, many opportunities for more complex interpretation of the environment and use cases will open up, thereby increasing the accessibility and utility of LiDAR sensors for all users.